SD-WAN FEATURED ARTICLE

The Need for Disaggregated Cloud Infrastructures

Today’s data infrastructures, whether on-premises or in the cloud, are typically based on general-purpose architectures that use a pre-configured allocation of compute and storage resources to meet a variety of application workload demands. As data-intensive applications proliferate, one-size-fits-all infrastructures fall short on performance, capacity and scalability, and struggle to meet varied application requirements. A newer, more flexible approach to resource allocation is therefore required to advance business efficiencies.

Disaggregating compute and storage resources is now an IT priority, because it lets organizations stretch their dollars and improve the utilization of hardware resources. To realize these gains through optimal application deployment, compute and storage resources must be able to scale independently of each other. Specialized software that runs on the storage node itself is required to take full advantage of the high-performance, networked Non-Volatile Memory Express (NVMe™) SSDs that make up the storage resource pool; it does so through abstraction, provisioning and improved flash SSD management. The shared storage resources can also be connected to automated container orchestration frameworks using a RESTful application programming interface (API).

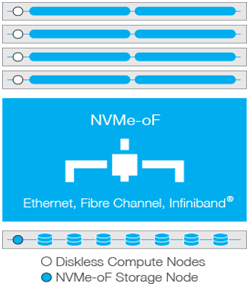

A networked protocol that disaggregates storage from the compute nodes is also needed, and NVMe over Fabrics (NVMe-oF™) has quickly become the protocol of choice. It is streamlined, high-performance and low-latency, making it a valuable component for architecting a more modern, agile and flexible data center.

Industry Challenge

Existing data centers typically use direct-attached storage (DAS) for cloud deployments (Figure 1); DAS is currently the most widely deployed storage architecture because of its low cost and simplicity. Embedding storage in servers as part of a DAS model also improves overall performance and data access. The DAS trade-off, however, is that CPU and storage resources are tightly locked together, which limits capacity and resource utilization. This typically drives storage overprovisioning and, with it, higher storage spending and total cost of ownership (TCO).

Data center architects who develop cloud infrastructures must take additional design steps to overcome many of the limitations of DAS, such as underutilized SSDs, and CPUs that need more storage performance or capacity than their server provides. Overprovisioning the entire data center to accommodate peak workloads is expensive and limits the ability to scale elastically; where deployed, it leaves resources underutilized except under peak workloads.
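The cost of sizing for peak can be illustrated with a little arithmetic. The sketch below is not drawn from any measured deployment: the demand figures, the function names and the sizing rules (each DAS server sized for its own worst case, a shared pool sized for the worst-case total) are all assumptions chosen to show the effect.

```python
# Hypothetical storage demand in TB: demand[t][s] is what server s needs at time step t.
# These numbers are illustrative assumptions, not measurements.
demand = [
    [8, 1, 2, 2],
    [3, 2, 6, 1],
    [2, 1, 3, 3],
]


def das_capacity(demand):
    """DAS: every server carries enough local flash for its own peak."""
    per_server_peak = [max(column) for column in zip(*demand)]
    return sum(per_server_peak)


def pooled_capacity(demand):
    """Shared pool: sized for the largest total demand seen at any one time."""
    return max(sum(row) for row in demand)


def average_utilization(demand, capacity):
    """Mean fraction of the provisioned capacity that is actually in use."""
    mean_used = sum(sum(row) for row in demand) / len(demand)
    return mean_used / capacity


das = das_capacity(demand)        # 8 + 2 + 6 + 3 = 19 TB
pool = pooled_capacity(demand)    # max(13, 12, 9) = 13 TB
print(f"DAS:    {das} TB provisioned, {average_utilization(demand, das):.0%} used on average")
print(f"Pooled: {pool} TB provisioned, {average_utilization(demand, pool):.0%} used on average")
```

Because the servers in this toy data set do not all peak at the same moment, the pool needs 13 TB where DAS needs 19 TB, and average utilization rises from roughly 60% to roughly 87%. If every workload peaked simultaneously, the two figures would be identical.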

Storage Disaggregation in the Cloud

Solving the IT resource disaggregation challenge has become easier thanks to the widespread adoption of two key technologies: (1) 10/25/100 Gigabit Ethernet (GbE) network connections and any high-performing network protocol; and (2) the NVMe-oF specification, which enables flash-based SSDs to communicate over a network and delivers nearly the same high-performance, low-latency benefits as locally attached NVMe-based SSDs, or under some workloads, even better performance.

NVMe-oF is designed to address the inefficiencies associated with the DAS architecture by disaggregating high-performance NVMe SSD resources from the compute nodes and making them available across a network infrastructure as network-attached shared resources. Pooling provides the ability to provision the right amount of storage or compute for each application workload, on each server within the data center. When integrated with automated orchestration frameworks (such as Kubernetes® and OpenStack®), pooling can also borrow compute resources from lower-priority applications during peak workload demands. While this approach is similar to other block storage technologies, such as iSCSI, NVMe-oF lets different hosts share pooled flash storage at much lower latencies.
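The idea of provisioning "the right amount" from a shared pool can be sketched as a toy allocator. This is a conceptual model only: the `StoragePool` class, its methods and the host and volume names are hypothetical, and it does not represent the NVMe-oF protocol or any vendor's provisioning API.

```python
class StoragePool:
    """Toy model of a shared flash pool carved into per-host volumes (hypothetical)."""

    def __init__(self, capacity_gb):
        self.capacity_gb = capacity_gb
        self.volumes = {}  # volume name -> (host, size_gb)

    def free_gb(self):
        """Capacity not yet assigned to any volume."""
        return self.capacity_gb - sum(size for _, size in self.volumes.values())

    def provision(self, name, host, size_gb):
        """Carve a volume of exactly the requested size out of the pool."""
        if name in self.volumes:
            raise ValueError(f"volume {name!r} already exists")
        if size_gb > self.free_gb():
            raise RuntimeError("pool exhausted")
        self.volumes[name] = (host, size_gb)

    def release(self, name):
        """Return a volume's capacity to the pool for other hosts to use."""
        del self.volumes[name]


pool = StoragePool(capacity_gb=1000)
pool.provision("db-data", host="host-a", size_gb=600)
pool.provision("cache", host="host-b", size_gb=300)
print(pool.free_gb())   # 100
pool.release("db-data")
print(pool.free_gb())   # 700
```

The point of the model is that capacity released by one host immediately becomes available to any other host, which is what local drives in a DAS server cannot offer.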

Benefits of Disaggregation

Moving from a DAS-based storage architecture to a disaggregated, cloud-based shared storage model (Figure 2) can dramatically increase CPU and SSD utilization, eliminating the need to overbuild or overprovision resources to meet peak application workload demands. Separating storage resources from the compute servers provides a wide range of financial, operational and performance benefits that align well with a move to a cloud infrastructure. Improvements include:

- Capacity Utilization:

The ability to separate storage resources from the server enables the right levels of capacity to be applied to each compute node and each workload, reducing the need to overprovision storage in each server.

- Server Utilization:

As compute needs vary across applications over time, expensive CPU resources may become stranded. Disaggregation provides the flexibility to allocate low-latency flash to I/O-intensive applications using far fewer compute nodes for processing. The combination of disaggregation and NVMe-oF attacks the stranded resource problem by improving overall CPU utilization, maximizing storage capacity without overprovisioning, and adding compute nodes only when required.

- Latency Performance:

Disaggregation via NVMe-oF keeps latency virtually indistinguishable from that of direct-attached drives and abstracts the physical drives into a high-performance pool that enables flash to be shared among workload instances.

- Web-Scale Opportunities:

Disaggregation also allows for the creation of more powerful, web-scale clouds where massive pools of cloud-computing resources can be made available within the data center, used and shifted based on need or service delivery policies across specific stateless, stateful and batch applications.

- Space, Power and Cooling Requirements:

With disaggregation, resource utilization rates rise sharply, reducing the need for additional space, power and cooling, which in turn reduces storage spending and TCO.

- Live VM Migration without Moving Data:

In a software-defined data center, virtual machines (VMs) frequently need to move from one physical server to another for various reasons (load-balancing, maintenance, etc.). In a disaggregated architecture, data can remain in its protected location while the VMs migrate to other locations. Moving only the VMs streamlines the process and reduces the risk to data.
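The last benefit in the list above, migrating a VM without moving its data, can be sketched as follows. The `Volume` record, both migration functions and all host names are hypothetical; the sketch only contrasts how much data has to be copied in each model.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Volume:
    name: str
    size_gb: int
    storage_node: str   # where the data physically lives
    attached_host: str  # compute server currently running the VM


def migrate_das(vol, new_host):
    """DAS: the data lives inside the server, so it must follow the VM."""
    moved = replace(vol, storage_node=new_host, attached_host=new_host)
    return moved, vol.size_gb  # the whole volume is copied


def migrate_disaggregated(vol, new_host):
    """Disaggregated: only the attachment changes; the data stays where it is."""
    moved = replace(vol, attached_host=new_host)
    return moved, 0  # nothing is copied


das_vol = Volume("vm1-disk", 500, storage_node="server-1", attached_host="server-1")
pool_vol = Volume("vm1-disk", 500, storage_node="storage-node-1", attached_host="server-1")

_, copied_das = migrate_das(das_vol, "server-2")
after, copied_pool = migrate_disaggregated(pool_vol, "server-2")
print(copied_das, copied_pool)   # 500 0
print(after.storage_node)        # storage-node-1
```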

Organizations are adopting cloud architectures to help maximize the flexibility, scalability and utilization of compute and storage resources within their data centers. The traditional software-defined storage (SDS) cloud model based on DAS architectures does not share resources as efficiently as the disaggregated approach, resulting in lower compute and storage capacity utilization, and a reduction in overall economic performance (Figure 3).

When the NVMe-oF protocol is added to the mix, the limitations and inefficiencies associated with DAS architectures are better handled by disaggregating the high-performance NVMe SSDs from the compute nodes and making them shareable across a network.

Final Thoughts

The next generation of performance-centric and latency-sensitive applications is now integral to today’s cloud infrastructure planning and operations, and this requires IT organizations to rethink their data center storage strategies. Increasingly, these strategies are moving toward a shared infrastructure and cloud orchestration to allocate the right amount of storage and performance for each application workload. As such, disaggregated storage in the cloud is becoming a preferred, cost-efficient model. At the center of this movement is the heightened demand for NVMe-based flash storage and the NVMe-oF framework that makes cloud-based disaggregation a reality.

About the Author: Joel Dedrick is the Vice President and General Manager of KumoScale™ shared accelerated storage software at Toshiba Memory America, Inc. (TMA), a solution that enables the NVMe over Fabrics protocol to make flash storage accessible over a data center network. As an executive technologist, Mr. Dedrick has over 20 years’ experience turning promising technologies into category-leading products for public, private and start-up companies. His areas of expertise include storage, networking, semiconductors and special-purpose computational architectures. Mr. Dedrick earned a Bachelor of Science degree in Electrical Engineering from the University of Nebraska, and a Master of Science degree in Electrical Engineering, with a digital signal processing (DSP) emphasis, from Southern Methodist University.

Edited by Maurice Nagle